POFL PROTOCOL

--Decentralized AGI at Your Fingertips

Experience CerboAI's revolutionary approach to AI. CerboAI delivers personalized Edge AI models directly to your devices, ensuring data sovereignty and local inference. Securely contribute your data, earn tokens, and access your on-device AI copilot that intelligently integrates with your apps, offering Web3 capabilities and AI-generated content.

PoFL Protocol

CerboAI's Edge AI runs locally on your device, offering personalized intelligence without compromising privacy.

Data Sovereignty

With CerboAI, your data stays on your device, ensuring complete control and security in AI interactions.

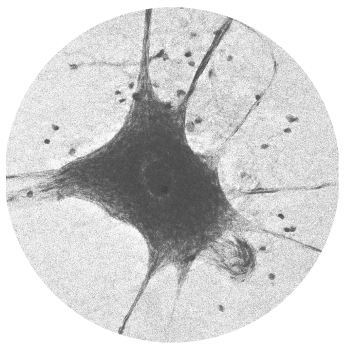

Federated Learning

Contribute to a decentralized AGI network while maintaining data control with CerboAI's innovative federated learning system.

Personal AI Copilot

Ghost, your CerboAI assistant, seamlessly integrates with your apps for a tailored, intelligent experience.

PoFL Technical Indicators

With PoFL technology, we have achieved new advancements in the following five directions.

1. Distributed Computation and Efficiency

Traditional fine-tuning depends heavily on centralized computational resources. Data and model updates must be transferred to and from a central server, which can be inefficient and time-consuming. Federated Learning, on the other hand, distributes both data and computational tasks. Each client performs training locally, and the central server's role is limited to aggregating the model parameters rather than handling raw data or performing heavy computations. This distributed approach not only improves efficiency but also reduces latency and the need for extensive data transfer.

2. Enhanced Privacy and Security

Traditional fine-tuning's reliance on centralized data poses significant privacy risks. By aggregating sensitive information in one place, the risk of data breaches and privacy violations increases. Federated Learning addresses this issue by decentralizing data ownership. In this approach, each client retains complete control over their own data. Training occurs locally on the client's device, and only model parameters are shared with a central server. This means that sensitive data never leaves the client's device, significantly enhancing data privacy and security.

For example, consider differential privacy (DP): an algorithm M provides (ε, δ)-differential privacy if, for any two adjacent datasets D and D′ and any output subset Ω, the following holds:

$$\Pr[M(D) \in \Omega] \le e^{\epsilon} \cdot \Pr[M(D') \in \Omega] + \delta$$
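To make the inequality above concrete, here is a minimal sketch using classic randomized response on a single private bit. Randomized response is a textbook mechanism chosen only for illustration; the source does not say that CerboAI uses it. The function names are hypothetical.

```python
import math

def randomized_response_probs(truth: bool) -> dict:
    """Output distribution of randomized response for one private bit.

    With probability 1/2 the true bit is reported; otherwise a fair coin
    flip is reported, so the true value is output with probability 3/4.
    """
    p_truth = 0.5 + 0.5 * 0.5
    return {truth: p_truth, (not truth): 1.0 - p_truth}

def worst_case_epsilon() -> float:
    """Smallest epsilon that satisfies the DP inequality with delta = 0.

    The output space has two points, so checking single outputs in both
    directions (D vs D' and D' vs D) covers every output subset.
    """
    p_d = randomized_response_probs(True)         # dataset D: the bit is True
    p_d_prime = randomized_response_probs(False)  # adjacent D': the bit is False
    ratios = [p_d[o] / p_d_prime[o] for o in (True, False)]
    ratios += [p_d_prime[o] / p_d[o] for o in (True, False)]
    return math.log(max(ratios))

epsilon = worst_case_epsilon()
print(f"randomized response is ({epsilon:.4f}, 0)-DP")
```

The worst-case probability ratio is 0.75 / 0.25 = 3, so the mechanism satisfies the definition with ε = ln 3 and δ = 0.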

3. Scalability and Flexibility

With traditional fine-tuning, scaling the training process often requires significant infrastructure investments. Federated Learning inherently supports scalability by leveraging the computational power of distributed clients. As the number of clients increases, the system scales efficiently, without placing undue strain on a centralized server. This flexibility allows for faster and more scalable training processes, accommodating a wide range of AI models and applications.

4. Incentivization and Fairness

Traditional fine-tuning methods generally lack mechanisms for incentivizing contributors. Federated Learning platforms often integrate blockchain technology to create transparent and equitable reward systems. Contributors are recognized and rewarded for their participation and the quality of their updates, ensuring fair distribution of incentives and encouraging broader participation. This incentivization promotes a more collaborative and inclusive AI development environment.

Choose Federated Learning

CerboAI designed Proof of Federated Learning (PoFL) to incentivize and track Edge AI model training and its synchronization with the global model. PoFL is a distributed training system divided into four stages: broadcasting the server model to local models, local model training, uploading model parameters, and updating the global model. Since all information exchange involves only parameters and never raw data, it is one of the best ways to protect data privacy.
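The four stages can be sketched as a small simulation. This is a minimal illustration that assumes sample-count-weighted averaging (FedAvg) as the aggregation rule and a toy linear model; the source does not specify either, and the datasets and function names are hypothetical.

```python
from typing import List, Tuple

Weights = List[float]

def local_train(global_w: Weights, data: List[Tuple[float, float]],
                lr: float = 0.1, epochs: int = 5) -> Weights:
    """Stage 2: fit y = w*x + b on the client's private data with plain SGD."""
    w, b = global_w  # Stage 1: start from the broadcast server model
    for _ in range(epochs):
        for x, y in data:
            err = (w * x + b) - y
            w -= lr * err * x
            b -= lr * err
    return [w, b]

def aggregate(updates: List[Tuple[Weights, int]]) -> Weights:
    """Stage 4: sample-count-weighted mean of the uploaded parameters."""
    total = sum(n for _, n in updates)
    dim = len(updates[0][0])
    return [sum(w[i] * n for w, n in updates) / total for i in range(dim)]

# Hypothetical private datasets drawn from y = 2x + 1; they stay on the client.
clients = [
    [(0.0, 1.0), (1.0, 3.0)],
    [(2.0, 5.0), (3.0, 7.0), (4.0, 9.0)],
]

global_w: Weights = [0.0, 0.0]
for _ in range(50):
    # Stage 3: each client uploads only (parameters, sample count), never data.
    updates = [(local_train(global_w, d), len(d)) for d in clients]
    global_w = aggregate(updates)

print(global_w)  # approaches the true parameters w = 2, b = 1
```

Only the two-element parameter list crosses the client boundary in each round, which is the property the paragraph above relies on.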

1. Federated Learning Network

The motivation behind PoFL is to provide a new model of data ownership and sustainable incentivization/engagement for federated learning. Traditional centralized learning models often compromise data privacy and security. PoFL aims to decentralize the learning process and provide strong incentives for high-quality contributions. We anchor the user-end weight files on the blockchain and keep user data local. While protecting user privacy, this enhances the capabilities of our central model.

2. Construct PoFL Aggregate Score

We construct the PoFL score based on the user's participation metrics and allocate rewards to participants in proportion to this score.

```

PoFL-Score_model = ∑ (γ × Improvement_global - δ × Degradation_global)

PoFL-Score_validation = ∑ (η × Correct_validation - θ × Incorrect_validation)

PoFL-Score = ∑ (Weight_stake × PoFL-Score_data) + PoFL-Score_model + PoFL-Score_validation

```

Participation metrics include but are not limited to the model contribution score and the verification accuracy score, with weights assigned to the number of file contributions.
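The three formulas above can be evaluated as follows. This is a sketch under stated assumptions: the coefficient values for γ, δ, η, and θ are hypothetical (the source names the symbols but gives no numbers), and PoFL-Score_data is taken as an input because the source does not define how it is computed.

```python
from dataclasses import dataclass
from typing import List, Tuple

@dataclass(frozen=True)
class Coefficients:
    """Hypothetical values; the source gives no numbers for these symbols."""
    gamma: float = 1.0  # weight on global-model improvement
    delta: float = 1.0  # weight on global-model degradation
    eta: float = 0.5    # weight on correct validations
    theta: float = 0.5  # weight on incorrect validations

def model_score(updates: List[Tuple[float, float]], c: Coefficients) -> float:
    """PoFL-Score_model: sum over (improvement, degradation) pairs."""
    return sum(c.gamma * imp - c.delta * deg for imp, deg in updates)

def validation_score(rounds: List[Tuple[int, int]], c: Coefficients) -> float:
    """PoFL-Score_validation: sum over (correct, incorrect) counts."""
    return sum(c.eta * ok - c.theta * bad for ok, bad in rounds)

def pofl_score(data_terms: List[Tuple[float, float]],
               updates: List[Tuple[float, float]],
               rounds: List[Tuple[int, int]],
               c: Coefficients = Coefficients()) -> float:
    """PoFL-Score: stake-weighted data scores plus model and validation scores."""
    data_part = sum(weight_stake * s for weight_stake, s in data_terms)
    return data_part + model_score(updates, c) + validation_score(rounds, c)

score = pofl_score(
    data_terms=[(0.5, 2.0)],             # (Weight_stake, PoFL-Score_data)
    updates=[(0.04, 0.0), (0.0, 0.01)],  # one helpful update, one harmful
    rounds=[(8, 2)],                     # 8 correct and 2 incorrect validations
)
print(round(score, 4))
```

With these example inputs the data term contributes 1.0, the model term 0.03, and the validation term 3.0.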

```
{
  "instruction": "hi",
  "input": "",
  "output": "Hello! How can I assist you today?"
},
{
  "instruction": "hello",
  "input": "",
  "output": "Hello! How can I assist you today?"
},
```

3. Protocol Incentives

The protocol issues rewards in the form of tokens. Rewards are distributed proportionally to the PoFL score, incentivizing high-quality data provision, impactful model contributions, and accurate validations. In the initial bootstrap phase, the focus is on increasing data volume and distribution. The protocol earns no revenue; users only pay for the minimal blockchain resources used to calculate and store their PoFL score and distribute associated incentives.
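Proportional distribution can be sketched as below. The pool size, the participant names, the clamping of negative scores to zero, and the largest-remainder rounding rule are all assumptions made for illustration; the source states only that rewards are proportional to the PoFL score.

```python
from typing import Dict

def distribute_rewards(scores: Dict[str, float], pool: int) -> Dict[str, int]:
    """Split `pool` indivisible token units in proportion to PoFL scores."""
    # Assumption: a negative score earns nothing rather than owing tokens.
    positive = {k: max(v, 0.0) for k, v in scores.items()}
    total = sum(positive.values())
    if total == 0:
        return {k: 0 for k in scores}

    exact = {k: pool * v / total for k, v in positive.items()}
    payouts = {k: int(share) for k, share in exact.items()}

    # Hand out units lost to rounding, largest fractional remainder first.
    leftover = pool - sum(payouts.values())
    by_remainder = sorted(exact, key=lambda k: exact[k] - payouts[k], reverse=True)
    for k in by_remainder[:leftover]:
        payouts[k] += 1
    return payouts

payouts = distribute_rewards({"alice": 4.03, "bob": 2.0, "carol": -1.0}, pool=1000)
print(payouts)
```

Rounding by largest remainder keeps the total paid out equal to the pool, so no token units are created or lost.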

```
learning_rate_max: 5e-5
learning_rate_min: 1e-6
dataset_sample: 200
max_seq_length: 2048
clip_threshold: 10  # Limit the maximum amplitude of the data before performing sensitivity calculations or adding noise
dp_fedavg_gaussian_enabled: True
epsilon: 1  # Quantifies the strength of privacy protection. The smaller ε is, the stronger the protection. In differential privacy, ε controls the uncertainty in the algorithm output caused by adding noise.
sensitivity: 1  # The maximum impact of a single piece of data on the query or analysis results
delta: 1e-5  # Upper limit on the probability that privacy protection is allowed to fail
training_arguments:
  output_dir: "./output"  # to be set by hydra
  overwrite_output_dir: True
  remove_unused_columns: True
  seed: 1234
  learning_rate: 5e-6  # to be set by the client
  per_device_train_batch_size: 4
  per_device_eval_batch_size: 4
  gradient_accumulation_steps: 1
  logging_steps: 20
  log_level: "info"
  logging_strategy: "steps"
  num_train_epochs: 1
  max_steps: -1
  save_steps: 100
  save_total_limit: 1
  gradient_checkpointing: True
  lr_scheduler_type: "cosine"
  warmup_ratio: 0.2
  do_eval: False
```
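The privacy-related keys above (`clip_threshold`, `epsilon`, `sensitivity`, `delta`) might be used as in the following sketch. It assumes L2-norm clipping of a client update followed by the classic Gaussian mechanism, whose noise formula is normally stated for 0 < ε < 1, so the configured ε = 1 sits at the edge of that range. How `dp_fedavg_gaussian_enabled` actually derives its noise is not described in the source, and all function names here are hypothetical.

```python
import math
import random
from typing import List

def gaussian_sigma(epsilon: float, delta: float, sensitivity: float) -> float:
    """Noise scale of the classic Gaussian mechanism."""
    return sensitivity * math.sqrt(2.0 * math.log(1.25 / delta)) / epsilon

def clip_by_l2_norm(update: List[float], clip_threshold: float) -> List[float]:
    """Scale the update down so its L2 norm is at most clip_threshold."""
    norm = math.sqrt(sum(v * v for v in update))
    scale = min(1.0, clip_threshold / norm) if norm > 0 else 1.0
    return [v * scale for v in update]

def privatize(update: List[float], clip_threshold: float, epsilon: float,
              delta: float, sensitivity: float, rng: random.Random) -> List[float]:
    """Clip first, then add independent Gaussian noise to every coordinate."""
    clipped = clip_by_l2_norm(update, clip_threshold)
    sigma = gaussian_sigma(epsilon, delta, sensitivity)
    return [v + rng.gauss(0.0, sigma) for v in clipped]

# Values copied from the configuration above.
sigma = gaussian_sigma(epsilon=1, delta=1e-5, sensitivity=1)
clipped = clip_by_l2_norm([30.0, 40.0], clip_threshold=10)  # norm 50 scaled to 10
noisy = privatize([30.0, 40.0], 10, 1, 1e-5, 1, random.Random(1234))
print(round(sigma, 4), clipped, len(noisy))
```

Clipping bounds how much any single update can move the aggregate, which is what lets a fixed noise scale provide a privacy guarantee.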

Proof of Federated Learning (PoFL)

Proof of Federated Learning (PoFL) offers a novel approach to incentivizing and rewarding participants in federated learning. By combining blockchain technology, smart contracts, and a dynamic scoring system, PoFL ensures data privacy, security, and fair incentivization, fostering a robust and collaborative environment for federated learning.

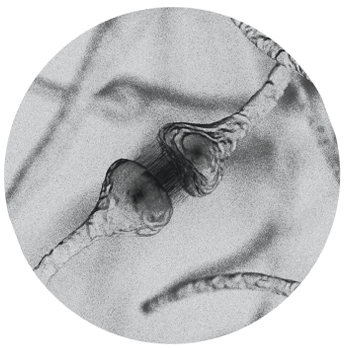

1. Latency 50ms (parameters)

A latency of 50ms for handling parameters, such as model weights or configuration settings, ensures that the system can quickly process and adjust these parameters. This low latency is vital for maintaining a seamless user experience and supporting efficient real-time processing, particularly in applications that require rapid response times, such as financial trading and real-time data analysis.

2. On-Chain File 2KB

Limiting the on-chain file size to 2KB is essential for the scalability and cost-efficiency of a blockchain system. A smaller file size reduces the amount of data stored and executed on the blockchain, leading to lower storage costs, reduced bandwidth requirements, and faster transaction processing. This also translates to decreased computational resource usage and energy consumption, making the system more efficient and environmentally sustainable.
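One way to stay inside a 2KB budget is to store a fixed-size digest of the weights on-chain instead of the weights themselves. The sketch below is a hypothetical record layout; the field names, the float32 packing, and the use of SHA-256 are assumptions, since the source does not describe what the on-chain file contains.

```python
import hashlib
import json
import struct
from typing import List

MAX_ONCHAIN_BYTES = 2048  # the 2KB limit stated above

def weights_digest(weights: List[float]) -> str:
    """SHA-256 over the weights packed as little-endian float32 values."""
    packed = struct.pack(f"<{len(weights)}f", *weights)
    return hashlib.sha256(packed).hexdigest()

def build_record(client_id: str, round_id: int, weights: List[float]) -> bytes:
    """Serialize a compact, deterministic record that references the weights."""
    record = {
        "client": client_id,
        "round": round_id,
        "n_params": len(weights),
        "sha256": weights_digest(weights),
    }
    payload = json.dumps(record, separators=(",", ":"), sort_keys=True).encode("utf-8")
    if len(payload) > MAX_ONCHAIN_BYTES:
        raise ValueError(f"record is {len(payload)} bytes, over the on-chain limit")
    return payload

# 100,000 parameters occupy about 400KB as float32, yet the record stays tiny.
payload = build_record("client-001", 7, [0.1] * 100_000)
print(len(payload), "bytes")
```

The record size depends on the identifiers and the digest, not on the model size, so the same budget holds for larger weight files.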

3. Training Energy Cost

Implementing highly parameter-efficient algorithms can reduce the time required for processing and adjusting model parameters. This includes using low-latency communication protocols that adapt quickly to changes, ensuring rapid and efficient parameter updates. It can also reduce the cost of model training by approximately 30%-50%, depending on the application scenario and the complexity of the training tasks.

4. Local Efficiency Improvement

By fine-tuning only a small portion of the parameters in a large model while keeping the others frozen, the computing resources and GPU memory required for training are reduced. Training time may be reduced by 80%-90%, and GPU memory usage by 50%-90%. Storage requirements also shrink, further optimizing on-chain data management and keeping the system lightweight and scalable.
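A rough sense of how few parameters need training can be had from a back-of-the-envelope count. The sketch assumes a low-rank adapter scheme (LoRA-style), which the source does not name, and a hypothetical 4096 × 4096 weight matrix with rank 8. It illustrates only the trainable-parameter count, not the time or memory percentages quoted above.

```python
def full_params(d_out: int, d_in: int) -> int:
    """Trainable parameters when the whole weight matrix is fine-tuned."""
    return d_out * d_in

def adapter_params(d_out: int, d_in: int, rank: int) -> int:
    """Trainable parameters for a rank-`rank` update B @ A, where
    B has shape (d_out, rank) and A has shape (rank, d_in)."""
    return rank * (d_out + d_in)

d_out, d_in, rank = 4096, 4096, 8  # hypothetical layer shape and adapter rank
full = full_params(d_out, d_in)
adapter = adapter_params(d_out, d_in, rank)
fraction = adapter / full

print(f"full: {full:,}  adapter: {adapter:,}  trainable share: {fraction:.4%}")
```

For this layer the adapter trains 65,536 of 16,777,216 parameters, or 1/256 of the matrix, and only those values need to be stored or transmitted as an update.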


Cerbo AI